One of the most common problems photographers face is capturing a scene that contains both very bright and very dark areas. In these cases, we usually end up sacrificing either the brightest or the darkest part, with disappointing results. There is a technique, however, that can solve this problem: High Dynamic Range Imaging (HDRI).

A beginner might underestimate the problem posed by situations like these, because the human eye adapts automatically (by changing the diameter of the pupil) to a wide range of lighting conditions. When we look at something dark, our pupils dilate, allowing us to see it clearly; when we look at something bright, our pupils shrink, letting less light through and again giving us good vision. This is not the case when taking a picture. The camera's equivalent of the pupil is the diaphragm. For a given photograph, we must choose one fixed diaphragm (aperture) setting. Therefore, when photographing in situations like these, we must choose one of the following:

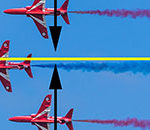

– Sacrifice the brightest parts by exposing correctly only for the darkest ones. This way, we lose all the details in the brightest parts, which will be completely overexposed, but retain the details in the dark parts.

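– Do the opposite: expose correctly only for the brightest parts. This way, we retain the details in the highlights but lose those in the dark parts, which will be underexposed.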

– Compromise by averaging the exposure. This loses details in both the brightest and the darkest parts, although to a lesser degree.

From a technical point of view, this problem arises because the image sensor (be it an electronic CCD or traditional film) has a finite brightness resolution. For instance, a CCD typically offers a maximum of 12 bits per RGB channel. If the differences in brightness within a scene need more than 12 bits, the sensor cannot accommodate the entire brightness range. This leads to the technique we want to describe: High Dynamic Range Imaging.
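As a rough illustration of the 12-bit limit, the sketch below estimates how many doublings of brightness (stops) a scene spans and compares that with the sensor's bit depth. It assumes a linear encoding in which each extra bit covers about one doubling of brightness; the function names and the sample brightness values are made up for the example.

```python
import math


def stops_needed(brightest: float, darkest: float) -> float:
    """Number of brightness doublings between the darkest and brightest value."""
    if brightest <= 0 or darkest <= 0:
        raise ValueError("brightness values must be positive")
    return math.log2(brightest / darkest)


def fits_in_sensor(brightest: float, darkest: float, sensor_bits: int = 12) -> bool:
    """True if the scene's brightness range fits in a linear sensor of this depth.

    Assumption: one bit per doubling of brightness (a simplification).
    """
    return stops_needed(brightest, darkest) <= sensor_bits


# A scene whose brightest point is 4096 times brighter than its darkest one
# spans exactly 12 doublings, so it just fits in 12 bits.
print(stops_needed(4096, 1))      # 12.0
# A 100000:1 scene needs more than 16 doublings and does not fit.
print(fits_in_sensor(100000, 1))  # False
```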

The dynamic range is defined as the brightness ratio between the brightest and the darkest point in an image. For a given photograph, our sensor gives us, let's say, a maximum of 12 bits of dynamic range. What about taking more than one picture of the same subject with different settings and then combining those pictures? In one picture, we set the correct exposure for the shadows and, in another, we set the correct exposure for the highlights. This way, every detail in our scene is clearly visible in at least one of the photos, regardless of its brightness. We can then combine these pictures so that all the details are visible in a single image.
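The combining step can be sketched as a weighted average. This is only a minimal illustration, not a specific published algorithm: it assumes the sensor responds linearly to light, that pixel values are already scaled to the range 0 to 1, and that the exposure time of each picture is known. The names `hat_weight` and `merge_exposures` are hypothetical.

```python
def hat_weight(value: float) -> float:
    """Trust mid-tones most; fully black (0) or fully white (1) pixels get weight 0."""
    return 1.0 - abs(2.0 * value - 1.0)


def merge_exposures(exposures):
    """Merge several pictures of the same scene into one brightness estimate per pixel.

    exposures: list of (pixels, exposure_time) pairs, where pixels is a list
    of floats in [0, 1] and all lists have the same length.
    """
    pixel_count = len(exposures[0][0])
    merged = []
    for i in range(pixel_count):
        weighted_sum = 0.0
        weight_total = 0.0
        for pixels, exposure_time in exposures:
            weight = hat_weight(pixels[i])
            # Dividing by the exposure time puts every picture on the same scale.
            weighted_sum += weight * pixels[i] / exposure_time
            weight_total += weight
        if weight_total > 0:
            merged.append(weighted_sum / weight_total)
        else:
            # Clipped in every picture: fall back to a plain average.
            plain = sum(p[i] / t for p, t in exposures) / len(exposures)
            merged.append(plain)
    return merged


# Two pixels: a deep shadow and a highlight.
short_exposure = ([0.0125, 0.5], 0.25)  # highlight well exposed, shadow nearly black
long_exposure = ([0.2, 1.0], 4.0)       # shadow well exposed, highlight clipped
print(merge_exposures([short_exposure, long_exposure]))
```

In this example the clipped highlight from the long exposure receives zero weight, so the merged value for that pixel comes entirely from the short exposure, while the shadow is taken mostly from the long one.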

A simple solution would be to extend the number of bits per RGB channel as much as necessary. In standard JPEG images, for instance, each RGB channel has just 8 bits. But there is nothing to prevent us from defining another standard that uses, for instance, 256 bits per channel. In fact, formats allowing 16 bits per channel already exist and are rather common, and there are others that allow even more. These are used in scientific fields such as astronomy, where precise quantitative measurements are required.

Therefore, the conceptual and practical solution to high dynamic range imaging could be just that: increase the bits per channel as necessary. However, another massive problem arises: how can we view these images? The problem here is the limitation posed by both monitors and printers. Monitors and printers handle no more than, let's say, 10 bits per channel, and they can't show us more than that. If we look at the same image coded at 8 or 16 bits on our monitor, we probably won't spot any difference, but that is the monitor's fault. The same holds true if we print those images.
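To see why the extra bits disappear on such a device, consider the crudest possible conversion: dropping the low-order bits. The sketch below is only an illustration of the information loss (the function name is made up, and real display pipelines do more than this), using the 10-bit figure assumed above.

```python
def reduce_bits(value: int, from_bits: int, to_bits: int) -> int:
    """Reduce an integer brightness code to fewer bits by dropping low-order bits."""
    if to_bits > from_bits:
        raise ValueError("to_bits must not exceed from_bits")
    return value >> (from_bits - to_bits)


# Two different 16-bit brightness levels...
a = 32768
b = 32768 + 63
# ...become the same level on a 10-bit device.
print(reduce_bits(a, 16, 10), reduce_bits(b, 16, 10))  # 512 512
```

Going from 16 to 10 bits this way, every group of 64 neighbouring source levels collapses into a single displayed level.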

This is the real problem today. How can we view, on a 10-bit device, a picture coded with 16 bits or even more? The discipline tackling this problem is called tone mapping, and many research centers around the world are working hard on it. Scaling down the number of bits per channel used in an image to the number of bits per channel allowed by our monitors or printers is a very challenging theoretical topic. Cutting-edge research even takes into account human visual perception, which is far from linear. A few software algorithms are already available, and many others are continually being proposed by research centers all over the world. In the near future, we should expect breakthrough technologies and sophisticated advances in this field.
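As a taste of what a tone mapping algorithm does, here is a minimal sketch of a very simple global curve, L / (1 + L), which is the basic form of the well-known Reinhard operator, followed by a gamma encoding and rounding to the display's bit depth. It is not any particular product's algorithm; the function name, the gamma value of 2.2 and the 8-bit default are assumptions chosen for the example, and input values are assumed to be non-negative.

```python
def tone_map(brightness_values, display_bits: int = 8, gamma: float = 2.2):
    """Compress unbounded brightness values into integer codes a display can show."""
    max_code = (1 << display_bits) - 1
    codes = []
    for value in brightness_values:
        # Squeeze the range [0, infinity) into [0, 1): bright values are
        # compressed strongly, dark values are left almost unchanged.
        compressed = value / (1.0 + value)
        # Gamma encoding gives more codes to dark tones, where the eye
        # is more sensitive to differences.
        encoded = compressed ** (1.0 / gamma)
        codes.append(round(encoded * max_code))
    return codes


# Values spanning a huge brightness range all land within 0..255.
print(tone_map([0.0, 0.05, 2.0, 1000.0]))
```

However large the input range, the output always fits the display, and brighter inputs never map to darker outputs. More sophisticated operators adapt the curve locally or to a model of human vision.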

Andrea Ghilardelli runs an online photo retouching service. To get your pictures beautifully retouched and for articles about photography, please visit his site: http://www.ilghila.com.
